Recently I came across this little MCU: the CH32V003. It's a RISC-V MCU with a small amount of flash and RAM. Mine is the SO8 variant with 16 KB of flash and 2 KB of RAM. That is half the flash of the ATmega328 on the Arduino Uno (32 KB) and the same amount of RAM, but it may be enough for some use cases.

So let's set up the software.

WCH has an IDE called MounRiver Studio. While it's on the better side of vendor-provided IDEs (thank God it's based on modern VS Code rather than Eclipse), I still want a setup that isn't vendor-dependent. I decided to use Docker to containerize my setup so I can recover quickly if I screw something up.

A quick search turned up a Docker image that already includes the toolchain and the tools needed to work with this MCU and the WCH-LinkE (the debugger): https://hub.docker.com/r/islandc/wch-riscv-devcontainer. Unfortunately, this image does not include the SDK, so we will have to pull the SDK later.

We'll also use the VS Code Dev Containers extension to set up the development environment inside that container.

The first step is to create a directory to serve as the project root. In this example, I'll call it hello_world.

Next, cd into the project root and create a directory called ‘.devcontainer’. This is where our Docker config file lives.

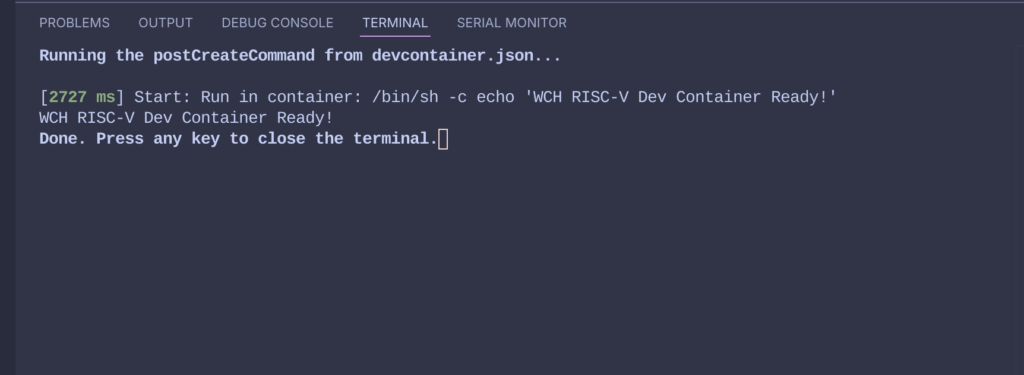

Then add a devcontainer.json file inside ‘.devcontainer’ to tell the Dev Containers extension how to set up the image. I asked Claude for this because, to be honest, I'm not a Docker expert.

```jsonc
{
  "name": "WCH RISC-V Development",
  "image": "islandc/wch-riscv-devcontainer:latest",

  // Features and settings
  "features": {},

  // VS Code customizations
  "customizations": {
    "vscode": {
      "extensions": [
        "ms-vscode.cpptools",
        "ms-vscode.cmake-tools",
        "marus25.cortex-debug"
      ],
      "settings": {
        "cmake.configureOnOpen": true,
        "C_Cpp.default.configurationProvider": "ms-vscode.cmake-tools"
      }
    }
  },

  // Container configuration
  "privileged": true, // Required for USB debugging
  "mounts": [
    // Mount USB devices for debugging
    "source=/dev,target=/dev,type=bind"
  ],

  // Port forwarding (if needed)
  "forwardPorts": [],

  // Post-create command
  "postCreateCommand": "echo 'WCH RISC-V Dev Container Ready!'",

  // Keep the container running: true tells VS Code to start a keep-alive
  // command instead of the image's default command
  "overrideCommand": true,

  // User settings
  "remoteUser": "root"
}
```

Now use the Dev Containers extension and choose "Reopen in Container". In theory, it should just work, and we are now inside the container.

The next part will cover pulling the SDK and setting up a hello_world project.